Feature Integration Theory explains how visual attention contributes to human object recognition. It assumes two separate stages of information processing. The first stage is automatic and so fast that we do not notice it: here we process the basic visual features of an object, such as its color, orientation, shape, or whether it is moving. Only in the second stage are these features combined, so that we perceive the whole object rather than its individual features. This second stage requires attention and is much slower than the first. Knowing about such cognitive processes can help us as designers, especially (but not only) in safety-critical areas, to design objects so that they can be found quickly thanks to the so-called pop-out effect.

In everyday life we spend a lot of time searching for particular things or objects. For example, we are looking for a friend at a concert. We know she has blonde hair and is wearing a red sweater, so we restrict our search to these characteristics. In psychology, the person with blonde hair and a red sweater would be called the “target stimulus”, or simply the “target”.

Visual search is a complex perceptual and attentional process. It deals with the problem of how we find objects that are relevant or interesting to us in a complex environment, that is, in a world full of objects that may be irrelevant to us. In psychological jargon, these irrelevant objects are called “interfering stimuli”, or simply “distractors”.

Visual search is necessary for, and very important in, our daily lives. Imagine having to process all the information in our visual field at once: our limited cognitive capacity alone makes this impossible. Visual search also matters a great deal for interface design, for example when we want to quickly find a specific app on our smartphone.


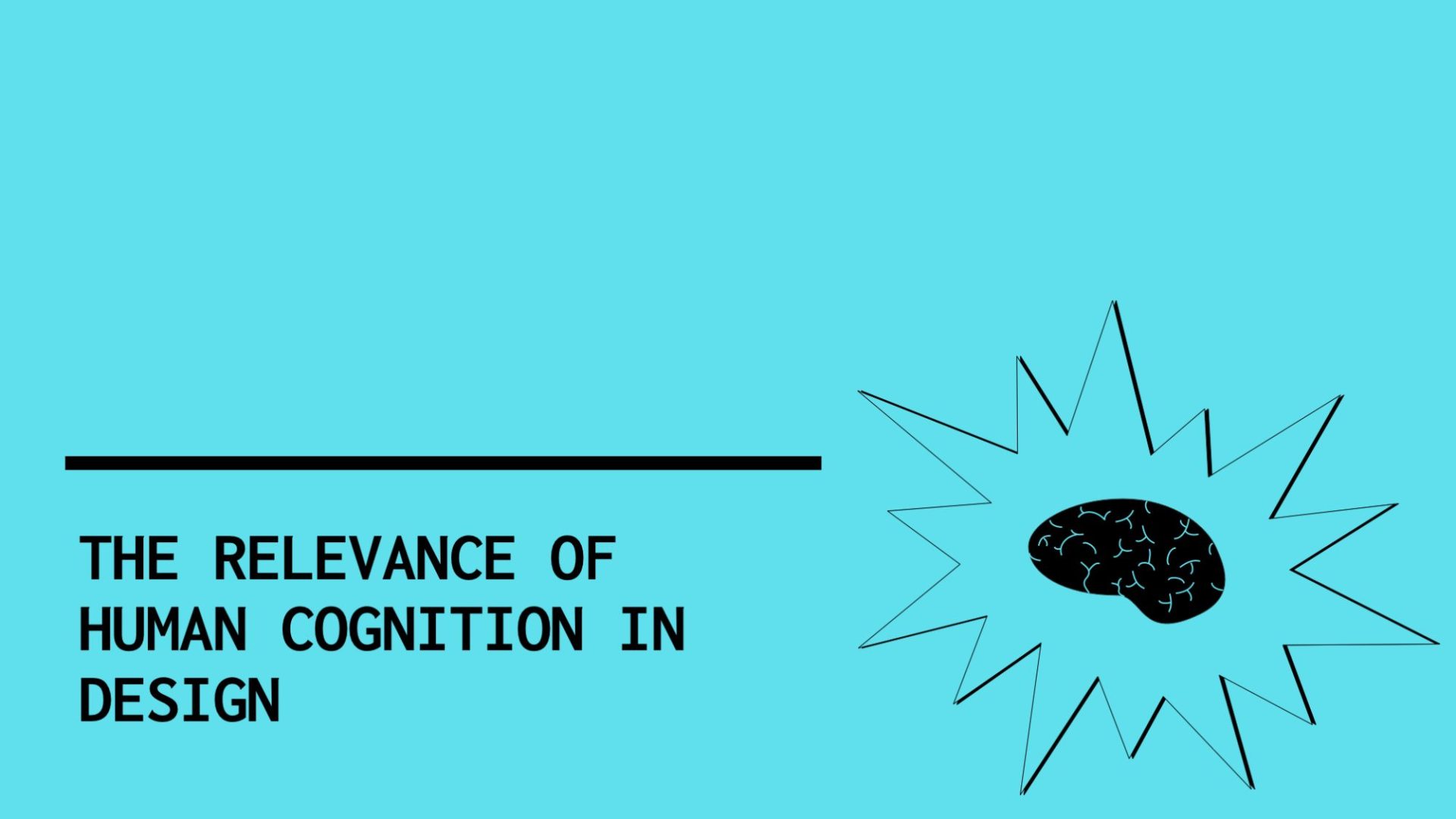

An example from everyday (digital) life

I myself very often tap the wrong app icon by accident when I want to open the FaceTime app. This happens when we are looking for a specific app but several apps share the same color.

The Feature Integration Theory

Feature Integration Theory, proposed by Anne Treisman and Garry Gelade (1980), offers an explanation for this phenomenon. According to the theory, we perceive objects in two steps.

First, basic visual features such as an object's color, motion, orientation, or shape are detected and processed automatically, and therefore very quickly. This is the so-called pre-attentive stage, since it requires no attention on our part.

However, combining and integrating these individual features so that we perceive the object as a whole (also called “conjunction”) is a slower process that requires attention. This attentive stage takes longer than the automatic first stage because the information has to be processed consciously.

Applied to the example above, this means: there are a lot of green apps on my display. Color is perceived very quickly and unconsciously (pre-attentive stage), which explains the mis-taps. Only in the second step (attentive stage) do I perceive “finer” details such as the specific symbol (camera, arrows, etc.) in combination with the color, and my brain forms a complete “FaceTime icon” or “WhatsApp icon” out of them. We, or rather our brain, integrate or “conjoin” all the features of the object into a whole.

The classic experiment

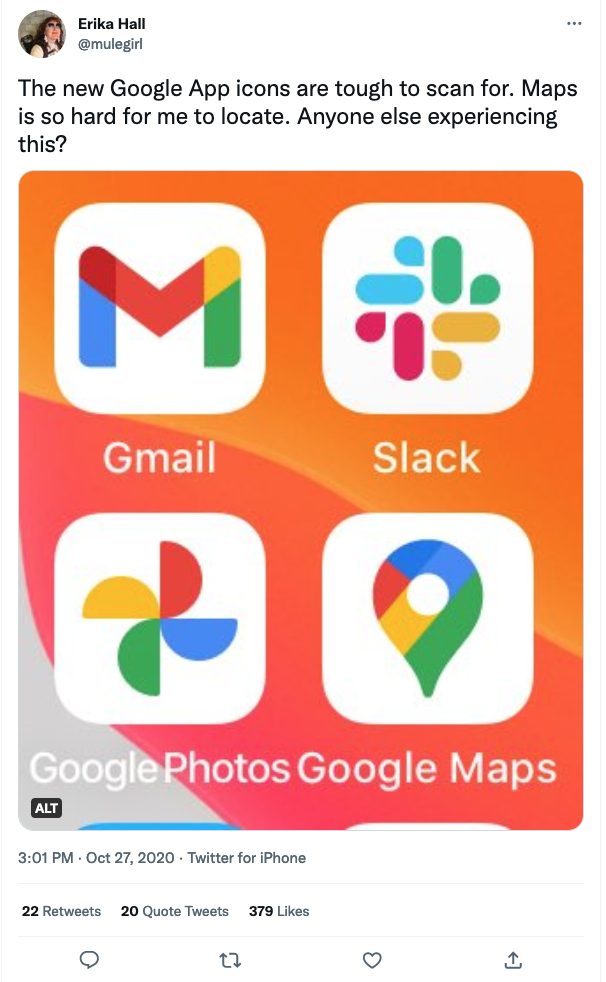

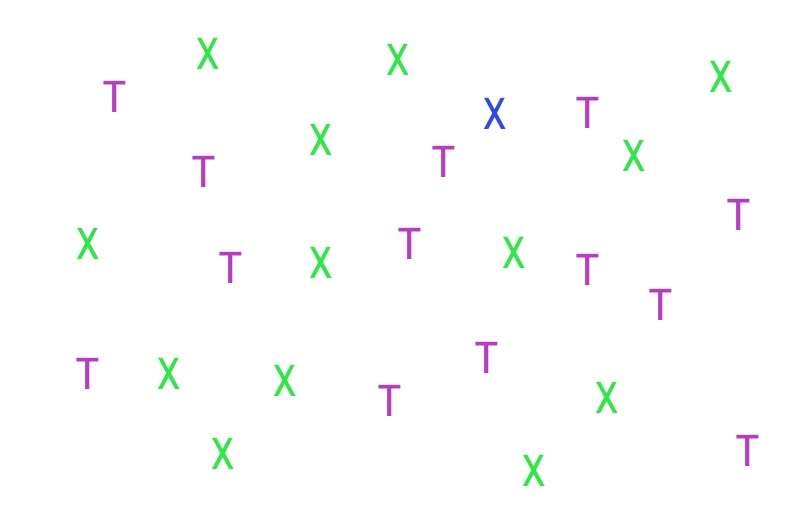

Let’s try the classic experiment, adapted from Treisman and Gelade (1980): try to find the blue “X” in the graph. The blue “X” is our target stimulus, and it is surrounded by distractors that are irrelevant to us.

OK, that was very easy, wasn’t it?

Let’s try the same thing again with more distractors:

Our blue “X” “pops out” (the pop-out effect) even when there are many distractors, so we recognize it very quickly. We take in all the objects at once and still spot the blue “X” almost instantly. This is possible because of the automatic parallel processing described above: we perceive all the objects in our visual field at the same time, and the target stands out because it has a unique property that none of the other objects share, namely its blue color.

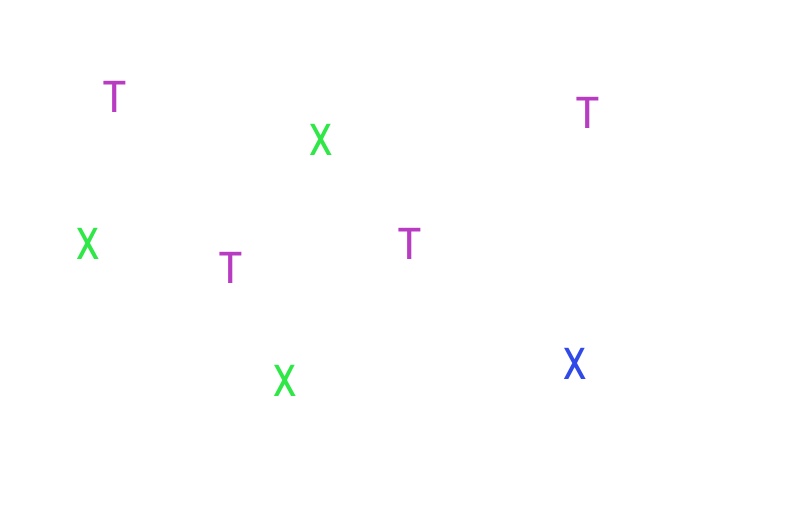

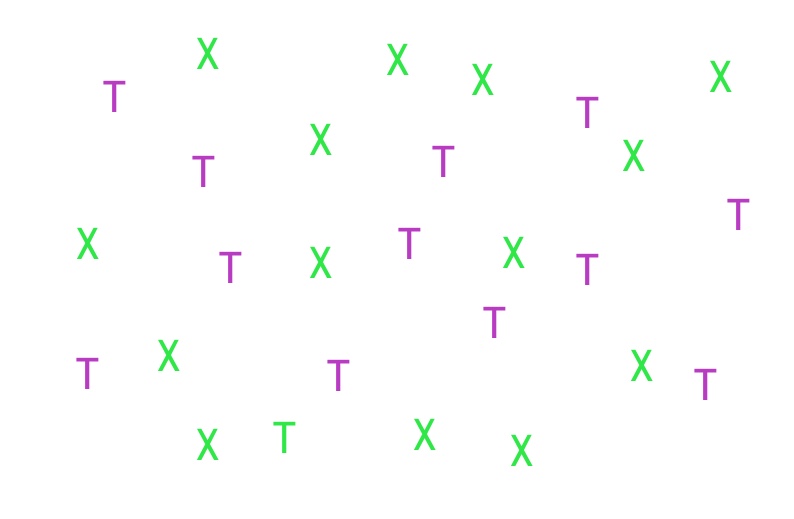

Let’s continue the experiment. Now try to find the green letter “T” in the graph (integration condition):

That probably took longer, didn’t it? Because we are no longer looking for a single unique feature, our target no longer stands out. Finding it is much more difficult because it is surrounded by other green letters and by other “T”s: the green “T” shares its color with the green “X”s and its shape with the purple “T”s.
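The difference between the two displays can be stated as a simple rule: a target pops out when at least one of its basic features is shared by none of the distractors. The following is a minimal Python sketch of that rule, not Treisman and Gelade's own formulation. It assumes each item is described only by color and shape, and it assumes (the text does not say) that the blue-X display uses the same green “X”s and purple “T”s as distractors; `unique_features` and `pops_out` are hypothetical helper names.

```python
# Hypothetical helpers illustrating the pop-out rule described above.
# Simplification: items carry only two feature dimensions, color and shape.


def unique_features(target: dict[str, str], distractors: list[dict[str, str]]) -> list[str]:
    """Return the feature dimensions on which the target differs from every distractor."""
    return [
        dimension
        for dimension, value in target.items()
        if all(d.get(dimension) != value for d in distractors)
    ]


def pops_out(target: dict[str, str], distractors: list[dict[str, str]]) -> bool:
    """Under this simplified rule, a target is found pre-attentively
    if at least one of its features is unique to it."""
    return len(unique_features(target, distractors)) > 0


# Distractors as in the integration condition: green Xs and purple Ts.
distractors = [
    {"color": "green", "shape": "X"},
    {"color": "purple", "shape": "T"},
]
blue_x = {"color": "blue", "shape": "X"}    # target in the feature condition
green_t = {"color": "green", "shape": "T"}  # target in the integration condition

print(unique_features(blue_x, distractors))   # ['color']
print(unique_features(green_t, distractors))  # []
```

The blue “X” has a unique color, so the rule predicts pop-out; the green “T” has no unique feature, only a unique combination of features, so the rule predicts that it has to be found with focused attention.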

We therefore have to look closely at each object and focus our attention on it in order to combine its features (shape and color). We scan one object at a time and, if it is not our target, move on to the next one until we find the target. This is a serial, step-by-step search process. Because every object has to be examined with focused attention, it takes time.

In the first two displays (“Find the blue X”, the so-called feature condition), search time is essentially unaffected by the number of distractors. The target is recognized automatically, unconsciously, and thus very quickly at the pre-attentive level thanks to its unique feature, color. We do not need directed, that is, conscious attention to find it; instead we rely on fast parallel information processing, and thus on parallel search.

In the integration condition, however, we have to direct our attention to the distractors one by one, which leads to slow serial processing and thus serial search. We check each element in turn to see whether it has the features of our target. As a result, search time increases with the number of visible distractors.
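The contrast between parallel and serial search can be illustrated with a small simulation. This is a deliberately simplified model under stated assumptions, not the analysis from the original study: it counts how many items must be inspected rather than measuring milliseconds, assumes parallel feature search costs one constant step regardless of display size, and assumes serial search is self-terminating (it stops as soon as the target is found) on displays that always contain the target. The function names are hypothetical.

```python
import random


def feature_search_steps(n_items: int, rng: random.Random) -> int:
    """Parallel search (simplified): the unique feature is registered across the
    whole display at once, so the cost does not depend on n_items."""
    return 1


def conjunction_search_steps(n_items: int, rng: random.Random) -> int:
    """Serial, self-terminating search (simplified): items are inspected one at a
    time in random order until the target is found."""
    if n_items < 1:
        raise ValueError("the display must contain at least the target")
    items = ["target"] + ["distractor"] * (n_items - 1)
    rng.shuffle(items)
    for inspected, item in enumerate(items, start=1):
        if item == "target":
            return inspected  # number of items inspected, including the target
    raise AssertionError("unreachable: the target is always in the display")


def mean_steps(search, n_items: int, trials: int = 10_000, seed: int = 0) -> float:
    """Average number of inspected items over many simulated trials."""
    rng = random.Random(seed)
    return sum(search(n_items, rng) for _ in range(trials)) / trials


for n in (4, 8, 16, 32):
    print(
        f"{n:>2} items: feature {mean_steps(feature_search_steps, n):.1f}, "
        f"conjunction {mean_steps(conjunction_search_steps, n):.1f}"
    )
```

In this model the feature-search cost stays flat, while the conjunction-search cost grows roughly as (n + 1) / 2, because on average half of the display has to be scanned before the target turns up.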

What does this mean for interface design?

If multiple objects have too many features in common, it can be difficult for us to find what we are looking for or are supposed to find. Feature Integration Theory gives us a scientific explanation that we can use to account for this in design decisions and to justify them.

Recall the first example with the Google icons: their features are too similar and have a lot in common. They share shape and color, both of which we perceive quickly and automatically at the pre-attentive stage. Because we cannot tell the icons apart at this fast processing level, we cannot find the right one quickly there. Instead we need the slower attentive stage: we have to scan every single element consciously and check whether it is our target, and that takes time.

This can pose a risk wherever quick action and reaction are required: think of healthcare, transportation, cars, aircraft, spacecraft, or industrial settings in general. But even for products that are not used in safety-critical environments, insights from cognitive psychology can help make people’s lives a little easier.

Sources

- Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97-136. https://doi.org/10.1016/0010-0285(80)90005-5